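Want to turn a single character illustration into short animations of the same character performing different actions, all on your local machine? This article walks through a HunyuanVideo 1.5 Image-to-Video workflow that ships with ComfyUI and that I, the site owner, have lightly modified. The whole image-to-video process, with a consistent character appearance, runs inside ComfyUI: give the model one character image, describe the motion and expression in a Chinese prompt, and it outputs a character animation clip whose length is set by the frame count.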

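HunyuanVideo-1.5 (Tencent Hunyuan Video-1.5) is a lightweight video generation model with about 8.3B parameters. Despite its small size, it comes close to flagship-class results in image detail, motion coherence, and overall visual quality. Its design emphasizes efficiency and usability, with deep optimization for consumer GPUs, so individual creators and developers can run inference on a single graphics card without large servers or expensive hardware. The model supports both T2V (text-to-video) and I2V (image-to-video), so you can start from a blank idea or add motion to a still image. This walkthrough uses I2V mode.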

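The test environment for this article:

- GPU: RTX 5070 Ti, 16GB VRAM

- Memory: 64GB RAM

- Video resolution: 496×720 (portrait, close to 720P)

- Clip length: 121 frames at 24 FPS ≈ 5 seconds

- Generation time: about 300 seconds per 5-second clip, or roughly 1 minute of generation per second of video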

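This article provides a ComfyUI workflow you can import directly, explains the relevant parameters, and ends with a test of five Chinese prompts.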

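Environment Setup and Model Placement

First complete a basic ComfyUI installation and confirm that the interface starts correctly (see the linked tutorial). Then download the models and encoders that HunyuanVideo 1.5 needs and place them in the right folders.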

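The HunyuanVideo 1.5 generation pipeline relies on several models and encoders. The diffusion model (loaded through the UNETLoader node) is the core component: a 720P Image-to-Video inference model that generates the content and motion of the whole clip. The VAE uses dedicated 3D VAE weights; it decodes latent features into multiple frames and compresses along both the spatial and temporal dimensions. For text understanding, DualCLIPLoader loads Qwen 2.5 VL and ByT5 as text encoders, so prompts can be written as natural sentences in Chinese or English. Finally, to keep the character's appearance stable, sigclip_vision_patch14_384 together with CLIPVisionEncode turns the character illustration into visual features, which serve as the key input for character consistency.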

Models and Paths

- Diffusion (UNet):

hunyuanvideo1.5_720p_i2v_cfg_distilled_fp8_scaled.safetensors [🔗 Download]

📁 Place in → ComfyUI/models/diffusion_models/

- VAE:

hunyuanvideo15_vae_fp16.safetensors [🔗 Download]

📁 Place in → ComfyUI/models/vae/

- Text Encoders:

qwen_2.5_vl_7b_fp8_scaled.safetensors, byt5_small_glyphxl_fp16.safetensors [🔗 Download]

📁 Place in → ComfyUI/models/text_encoders/

- CLIP Vision:

sigclip_vision_patch14_384.safetensors [🔗 Download]

📁 Place in → ComfyUI/models/clip_vision/
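Before launching the workflow, it can save time to confirm that every file sits in the folder listed above. The following is a minimal sketch of such a check; the filenames and subfolders come from the list, while the function name and the "ComfyUI" root path are placeholders to adapt to your own install.

```python
from pathlib import Path

# Expected layout taken from the list above: subfolder of ComfyUI/models -> filenames.
EXPECTED_MODELS = {
    "diffusion_models": ["hunyuanvideo1.5_720p_i2v_cfg_distilled_fp8_scaled.safetensors"],
    "vae": ["hunyuanvideo15_vae_fp16.safetensors"],
    "text_encoders": [
        "qwen_2.5_vl_7b_fp8_scaled.safetensors",
        "byt5_small_glyphxl_fp16.safetensors",
    ],
    "clip_vision": ["sigclip_vision_patch14_384.safetensors"],
}


def find_missing_models(comfyui_root):
    """Return the relative paths of expected model files that do not exist."""
    models_dir = Path(comfyui_root) / "models"
    missing = []
    for subfolder, filenames in EXPECTED_MODELS.items():
        for filename in filenames:
            if not (models_dir / subfolder / filename).is_file():
                missing.append(f"{subfolder}/{filename}")
    return missing


if __name__ == "__main__":
    # "ComfyUI" is a placeholder; point it at your own install directory.
    for path in find_missing_models("ComfyUI"):
        print("missing:", path)
```

An empty result means all five files were found where the workflow expects them.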

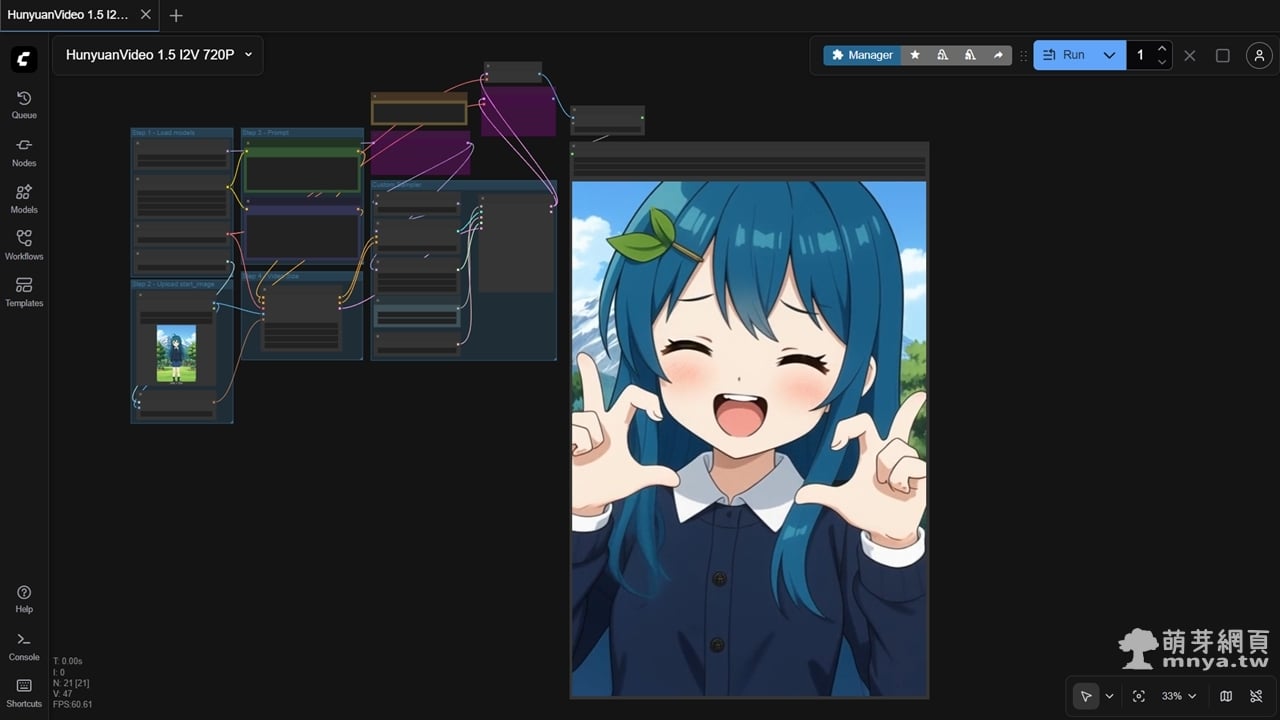

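Workflow Highlights and Parameter Design

This workflow organizes "load models → character illustration → Chinese prompt → video generation → export" into four easy-to-follow steps, each matching a node group in the graph:

- Step 1: Load the models (Step 1 - Load models)

- Step 2: Upload the start image (Step 2 - Upload start_image)

- Step 3: Enter the prompt (Step 3 - Prompt)

- Step 4: Set the video size and length (Step 4 - Video Size)

The sections below cover the key points of each block.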

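Step 1: Load Models and the Acceleration Node

- UNETLoader: loads the main HunyuanVideo 1.5 I2V model weights, which determine the overall look and motion of the video.

- VAELoader: loads the HunyuanVideo-specific VAE. Do not swap in another VAE, or you may run into incompatible spatial or temporal compression.

- DualCLIPLoader: loads Qwen 2.5 VL and ByT5 together, so you can describe motion, expression, and camera feel directly in Chinese.

- CLIPVisionLoader: provides the weights for the later CLIPVisionEncode node, which turns the reference image into visual semantics.

- EasyCache: an optional acceleration node. Enabling it noticeably shortens generation time at a slight cost in detail and stability. The workflow includes a note about it, and you can toggle it quickly with Ctrl + B.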

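Step 2: Upload the Start Image (the Basis of Image-to-Video)

In the Step 2 - Upload start_image group you will find:

- LoadImage: loads the character illustration or artwork used as the reference and as the first frame.

- CLIPVisionEncode: encodes the image into feature vectors with CLIP Vision and feeds them to HunyuanVideo15ImageToVideo.

This is where character consistency comes from: HunyuanVideo keeps the character's hair color, face shape, outfit, and overall style based on this start image, and only then animates the character according to the text prompt.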
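A start image rarely has the same aspect ratio as the output video, so it helps to know which part of the picture would survive a center crop. The sketch below computes that crop box for a target size using integer arithmetic only. `center_crop_box` is a hypothetical helper, and whether the workflow's nodes crop in exactly this way is an assumption.

```python
def center_crop_box(src_w, src_h, dst_w, dst_h):
    """Return (left, top, right, bottom) of the largest centered region
    of the source that has the destination aspect ratio."""
    # Compare aspect ratios by cross-multiplication to avoid float error.
    if src_w * dst_h > src_h * dst_w:
        # Source is wider than the target: keep full height, trim the sides.
        crop_h = src_h
        crop_w = src_h * dst_w // dst_h
    else:
        # Source is taller (or equal): keep full width, trim top and bottom.
        crop_w = src_w
        crop_h = src_w * dst_h // dst_w
    left = (src_w - crop_w) // 2
    top = (src_h - crop_h) // 2
    return (left, top, left + crop_w, top + crop_h)


if __name__ == "__main__":
    # A square 1024x1024 illustration cropped for the 496x720 output used here.
    print(center_crop_box(1024, 1024, 496, 720))
```

Checking the box in advance makes it easier to spot cases where the character's head or hands would fall outside the frame.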

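Step 3: Chinese Prompts (Positive / Negative)

In the Step 3 - Prompt group you can type Chinese sentences directly:

- CLIP Text Encode (Positive Prompt): describe the motion, expression, camera feel, and mood in natural language, for example 「對著鏡頭比讚、充滿自信」 ("giving a thumbs-up to the camera, full of confidence") or 「走路時微風吹動頭髮、背景有柔和景深」 ("hair moving in a light breeze while walking, soft depth of field in the background").

- CLIP Text Encode (Negative Prompt): optionally list elements you do not want, such as 「畫面抖動、臉部變形、多餘肢體、畫質模糊」 ("shaky footage, distorted face, extra limbs, blurry image"). If you would rather not tune it, leave it empty for a first test.

The text module in HunyuanVideo 1.5 handles Chinese well, so no special tag syntax is needed; just write the scene and the action.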
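One detail worth knowing when deciding whether to fill in the negative prompt: the workflow's CFGGuider is set to a CFG Scale of 1. Under the textbook classifier-free guidance formula, that setting makes the result equal to the positive prediction, so the negative prompt would contribute nothing. The sketch below shows only that arithmetic with plain lists and made-up numbers. `cfg_combine` is an illustrative name, and whether ComfyUI's guider follows this exact formula for this model is an assumption.

```python
def cfg_combine(cond, uncond, scale):
    """Textbook classifier-free guidance: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for c, u in zip(cond, uncond)]


if __name__ == "__main__":
    cond = [0.5, -1.0, 2.0]      # made-up prediction for the positive prompt
    uncond = [0.25, 0.0, 1.0]    # made-up prediction for the negative prompt
    print(cfg_combine(cond, uncond, 1.0))  # same values as cond
    print(cfg_combine(cond, uncond, 3.0))  # pushed further away from uncond
```

If that holds here, leaving the negative prompt empty for a first test costs nothing at CFG 1.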

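Step 4: Video Resolution and Length

In the Step 4 - Video Size group, the HunyuanVideo15ImageToVideo node controls:

- Width / Height: set to 496 × 720 here, close to portrait 720P, which is relatively stable and fast on 16GB VRAM.

- Frames: set to 121; combined at 24 FPS later, each clip runs about 5 seconds.

- Batch Size: keep at 1 to keep memory use under control.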
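The relation between frame count, frame rate, and clip length is simple arithmetic. The sketch below converts in both directions. Rounding to a frame count of the form 4n + 1 is an assumption inferred from the 121-frame setting (121 = 4 × 30 + 1), not something the workflow states, and the function names are illustrative.

```python
def clip_seconds(frames, fps=24):
    """Length of the clip in seconds for a given frame count."""
    return frames / fps


def frames_for_seconds(seconds, fps=24):
    """Nearest frame count of the form 4n + 1 for a target length.

    The 4n + 1 pattern is an assumption inferred from the 121-frame setting.
    """
    n = max(0, round((seconds * fps - 1) / 4))
    return 4 * n + 1


if __name__ == "__main__":
    print(clip_seconds(121))      # a little over 5 seconds
    print(frames_for_seconds(5))  # frame count for a 5-second target
```

Longer clips raise both memory use and generation time, so it is safer to increase the frame count gradually from the tested value.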

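Sampler and Video Output

The Custom Sampler block in the second half handles sampling and decoding:

- ModelSamplingSD3: wraps the model in a structure the SD3-style sampling flow can use (shift is set to 7 in this workflow).

- CFGGuider: receives the positive and negative conditioning. CFG Scale is set to 1 here; in I2V mode a high CFG tends to introduce jitter.

- BasicScheduler: scheduler set to simple, 20 steps.

- KSamplerSelect: uses the euler sampler.

- RandomNoise: a fixed seed reproduces the same result; switching to a random seed gives slightly different versions from the same prompt.

- SamplerCustomAdvanced: runs the full multi-step denoising and passes the latent result to the VAE decode node.

- VAEDecode / VAEDecodeTiled: restores the latent into individual frames.

- CreateVideo: assembles the frames at 24 FPS.

- SaveVideo: outputs an H.264-encoded mp4, saved to the specified location (for example HunyuanVideo1.5/ComfyUI).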
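To give a feel for what a shift value on ModelSamplingSD3 does, here is a minimal sketch of the time-shift formula commonly used with flow-matching models, applied to 20 evenly spaced noise levels with the shift of 7 stored in this workflow's JSON. That ComfyUI applies exactly this formula and spacing for this model is an assumption, and the function names are illustrative.

```python
def shift_sigma(sigma, shift):
    """Time shift commonly used for flow-matching models.

    A larger shift keeps sigma closer to 1 (more noise) for longer.
    """
    return shift * sigma / (1 + (shift - 1) * sigma)


def shifted_schedule(steps=20, shift=7.0):
    """Evenly spaced sigmas from 1 down to 0, each passed through the shift."""
    linear = [1 - i / steps for i in range(steps + 1)]
    return [shift_sigma(s, shift) for s in linear]


if __name__ == "__main__":
    for sigma in shifted_schedule()[:5]:
        print(round(sigma, 4))
```

With a shift of 7, the halfway point of the linear schedule (0.5) maps to 0.875, so under this formula more of the 20 steps are spent at high noise levels, where the overall motion and layout are decided.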

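Generation Time and Performance Notes

With an RTX 5070 Ti 16GB at 496×720, 121 frames, and 20 steps, the measured result was:

- A roughly 5-second clip takes about 300 seconds to generate

- That works out to about 1 minute of generation per second of video

For local single-GPU 720P I2V this speed is acceptable: it suits character motion drafts, PV composition, VTuber opening animations, and similar work.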
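These measurements can be turned into a rough planning estimate. The sketch below assumes every clip at the same settings takes the same time as the measured run, which is a simplification; the function names are illustrative.

```python
def generation_seconds_per_video_second(frames=121, fps=24, gen_seconds=300):
    """Generation time spent per second of output video."""
    return gen_seconds / (frames / fps)


def estimate_batch_minutes(clips, seconds_per_clip=300):
    """Rough total generation time in minutes, assuming each clip
    takes the same time as the measured 121-frame, 20-step run."""
    return clips * seconds_per_clip / 60


if __name__ == "__main__":
    print(round(generation_seconds_per_video_second(), 1))
    # The five prompt tests in this article at about 300 s each.
    print(estimate_batch_minutes(5))  # 25.0
```

On this hardware, a five-clip test like the one later in this article would therefore take around 25 minutes of pure generation time.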

📝 Workflow (HunyuanVideo 1.5 I2V 720P.json)

Copy the content below into a text editor and save it as HunyuanVideo 1.5 I2V 720P.json; you can then import it into ComfyUI.

{"id":"ecc20583-98c5-4707-83f4-631b49a2bf0b","revision":0,"last_node_id":142,"last_link_id":349,"nodes":[{"id":81,"type":"CLIPVisionLoader","pos":[-620,450],"size":[350,58],"flags":{},"order":0,"mode":0,"inputs":[],"outputs":[{"name":"CLIP_VISION","type":"CLIP_VISION","links":[225]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for S&R":"CLIPVisionLoader","models":[{"name":"sigclip_vision_patch14_384.safetensors","url":"https://huggingface.co/Comfy-Org/sigclip_vision_384/resolve/main/sigclip_vision_patch14_384.safetensors","directory":"clip_vision"}]},"widgets_values":["sigclip_vision_patch14_384.safetensors"]},{"id":11,"type":"DualCLIPLoader","pos":[-620,180],"size":[350,130],"flags":{},"order":1,"mode":0,"inputs":[],"outputs":[{"name":"CLIP","type":"CLIP","slot_index":0,"links":[205,240]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for S&R":"DualCLIPLoader","models":[{"name":"qwen_2.5_vl_7b_fp8_scaled.safetensors","url":"https://huggingface.co/Comfy-Org/HunyuanVideo_1.5_repackaged/resolve/main/split_files/text_encoders/qwen_2.5_vl_7b_fp8_scaled.safetensors","directory":"text_encoders"},{"name":"byt5_small_glyphxl_fp16.safetensors","url":"https://huggingface.co/Comfy-Org/HunyuanVideo_1.5_repackaged/resolve/main/split_files/text_encoders/byt5_small_glyphxl_fp16.safetensors","directory":"text_encoders"}]},"widgets_values":["qwen_2.5_vl_7b_fp8_scaled.safetensors","byt5_small_glyphxl_fp16.safetensors","hunyuan_video_15","default"]},{"id":79,"type":"CLIPVisionEncode","pos":[-610,960],"size":[290.390625,78],"flags":{},"order":10,"mode":0,"inputs":[{"name":"clip_vision","type":"CLIP_VISION","link":225},{"name":"image","type":"IMAGE","link":219}],"outputs":[{"name":"CLIP_VISION_OUTPUT","type":"CLIP_VISION_OUTPUT","links":[217]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for 
S&R":"CLIPVisionEncode"},"widgets_values":["center"]},{"id":10,"type":"VAELoader","pos":[-620,350],"size":[350,60],"flags":{},"order":2,"mode":0,"inputs":[],"outputs":[{"name":"VAE","type":"VAE","slot_index":0,"links":[206,224,306]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for S&R":"VAELoader","models":[{"name":"hunyuanvideo15_vae_fp16.safetensors","url":"https://huggingface.co/Comfy-Org/HunyuanVideo_1.5_repackaged/resolve/main/split_files/vae/hunyuanvideo15_vae_fp16.safetensors","directory":"vae"}]},"widgets_values":["hunyuanvideo15_vae_fp16.safetensors"]},{"id":80,"type":"LoadImage","pos":[-610,600],"size":[290.390625,314.00000000000006],"flags":{},"order":3,"mode":0,"inputs":[],"outputs":[{"name":"IMAGE","type":"IMAGE","links":[218,219]},{"name":"MASK","type":"MASK","links":null}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for S&R":"LoadImage"},"widgets_values":["Generated Image December 02, 2025 - 12_12AM.jpg","image"]},{"id":120,"type":"VAEDecodeTiled","pos":[640,-140],"size":[270,150],"flags":{},"order":18,"mode":4,"inputs":[{"name":"samples","type":"LATENT","link":322},{"name":"vae","type":"VAE","link":306}],"outputs":[{"name":"IMAGE","type":"IMAGE","links":[]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.70","Node name for S&R":"VAEDecodeTiled"},"widgets_values":[512,64,64,4096]},{"id":126,"type":"BasicScheduler","pos":[250,480],"size":[315,106],"flags":{},"order":13,"mode":0,"inputs":[{"name":"model","type":"MODEL","link":316}],"outputs":[{"name":"SIGMAS","type":"SIGMAS","links":[313]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for S&R":"BasicScheduler"},"widgets_values":["simple",20,1]},{"id":127,"type":"RandomNoise","pos":[250,620],"size":[315,82],"flags":{},"order":4,"mode":0,"inputs":[],"outputs":[{"name":"NOISE","type":"NOISE","links":[310]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for 
S&R":"RandomNoise"},"widgets_values":[887963123424675,"fixed"],"color":"#2a363b","bgcolor":"#3f5159"},{"id":128,"type":"KSamplerSelect","pos":[250,750],"size":[315,58],"flags":{},"order":5,"mode":0,"inputs":[],"outputs":[{"name":"SAMPLER","type":"SAMPLER","links":[312]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for S&R":"KSamplerSelect"},"widgets_values":["euler"]},{"id":130,"type":"ModelSamplingSD3","pos":[250,240],"size":[315,58],"flags":{},"order":14,"mode":0,"inputs":[{"name":"model","type":"MODEL","link":317}],"outputs":[{"name":"MODEL","type":"MODEL","slot_index":0,"links":[314]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for S&R":"ModelSamplingSD3"},"widgets_values":[7]},{"id":125,"type":"SamplerCustomAdvanced","pos":[630,250],"size":[272.3617858886719,326],"flags":{},"order":16,"mode":0,"inputs":[{"name":"noise","type":"NOISE","link":310},{"name":"guider","type":"GUIDER","link":311},{"name":"sampler","type":"SAMPLER","link":312},{"name":"sigmas","type":"SIGMAS","link":313},{"name":"latent_image","type":"LATENT","link":315}],"outputs":[{"name":"output","type":"LATENT","slot_index":0,"links":[320,322]},{"name":"denoised_output","type":"LATENT","links":[]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for S&R":"SamplerCustomAdvanced"},"widgets_values":[]},{"id":105,"type":"EasyCache","pos":[240,20],"size":[360,130],"flags":{},"order":11,"mode":4,"inputs":[{"name":"model","type":"MODEL","link":270}],"outputs":[{"name":"MODEL","type":"MODEL","links":[316,317]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for S&R":"EasyCache"},"widgets_values":[0.2,0.15,0.95,false]},{"id":8,"type":"VAEDecode","pos":[650,-230],"size":[210,46],"flags":{},"order":17,"mode":0,"inputs":[{"name":"samples","type":"LATENT","link":320},{"name":"vae","type":"VAE","link":206}],"outputs":[{"name":"IMAGE","type":"IMAGE","slot_index":0,"links":[308]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for 
S&R":"VAEDecode"},"widgets_values":[]},{"id":101,"type":"CreateVideo","pos":[962.7429541526486,-71.1463354577958],"size":[270,78],"flags":{},"order":19,"mode":0,"inputs":[{"name":"images","type":"IMAGE","link":308},{"name":"audio","shape":7,"type":"AUDIO","link":null}],"outputs":[{"name":"VIDEO","type":"VIDEO","links":[349]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for S&R":"CreateVideo"},"widgets_values":[24]},{"id":12,"type":"UNETLoader","pos":[-620,50],"size":[350,82],"flags":{},"order":6,"mode":0,"inputs":[],"outputs":[{"name":"MODEL","type":"MODEL","slot_index":0,"links":[270]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for S&R":"UNETLoader","models":[{"name":"hunyuanvideo1.5_720p_i2v_fp16.safetensors","url":"https://huggingface.co/Comfy-Org/HunyuanVideo_1.5_repackaged/resolve/main/split_files/diffusion_models/hunyuanvideo1.5_720p_i2v_fp16.safetensors","directory":"diffusion_models"}]},"widgets_values":["hunyuanvideo1.5_720p_i2v_cfg_distilled_fp8_scaled.safetensors","default"]},{"id":93,"type":"CLIPTextEncode","pos":[-220,260],"size":[422.84503173828125,200],"flags":{},"order":9,"mode":0,"inputs":[{"name":"clip","type":"CLIP","link":240}],"outputs":[{"name":"CONDITIONING","type":"CONDITIONING","links":[246]}],"title":"CLIP Text Encode (Negative Prompt)","properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for 
S&R":"CLIPTextEncode"},"widgets_values":[""],"color":"#223","bgcolor":"#335"},{"id":78,"type":"HunyuanVideo15ImageToVideo","pos":[-160,580],"size":[296.3169921875,210],"flags":{},"order":12,"mode":0,"inputs":[{"name":"positive","type":"CONDITIONING","link":222},{"name":"negative","type":"CONDITIONING","link":246},{"name":"vae","type":"VAE","link":224},{"name":"start_image","shape":7,"type":"IMAGE","link":218},{"name":"clip_vision_output","shape":7,"type":"CLIP_VISION_OUTPUT","link":217}],"outputs":[{"name":"positive","type":"CONDITIONING","links":[318]},{"name":"negative","type":"CONDITIONING","links":[319]},{"name":"latent","type":"LATENT","links":[315]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for S&R":"HunyuanVideo15ImageToVideo"},"widgets_values":[496,720,121,1]},{"id":129,"type":"CFGGuider","pos":[250,340],"size":[315,98],"flags":{},"order":15,"mode":0,"inputs":[{"name":"model","type":"MODEL","link":314},{"name":"positive","type":"CONDITIONING","link":318},{"name":"negative","type":"CONDITIONING","link":319}],"outputs":[{"name":"GUIDER","type":"GUIDER","links":[311]}],"properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for S&R":"CFGGuider"},"widgets_values":[1]},{"id":121,"type":"Note","pos":[240,-120],"size":[350,90],"flags":{},"order":7,"mode":0,"inputs":[],"outputs":[],"properties":{},"widgets_values":["EasyCache can speed up this workflow, but it will also sacrifice the video quality. 
If you need it, use Ctrl+B to enable."],"color":"#432","bgcolor":"#653"},{"id":44,"type":"CLIPTextEncode","pos":[-220,50],"size":[422.84503173828125,164.31304931640625],"flags":{},"order":8,"mode":0,"inputs":[{"name":"clip","type":"CLIP","link":205}],"outputs":[{"name":"CONDITIONING","type":"CONDITIONING","slot_index":0,"links":[222]}],"title":"CLIP Text Encode (Positive Prompt)","properties":{"cnr_id":"comfy-core","ver":"0.3.68","Node name for S&R":"CLIPTextEncode"},"widgets_values":["保持角色外觀一致,對著鏡頭比讚,充滿自信的表情"],"color":"#232","bgcolor":"#353"},{"id":142,"type":"SaveVideo","pos":[960,60],"size":[270,489.93548387096774],"flags":{},"order":20,"mode":0,"inputs":[{"name":"video","type":"VIDEO","link":349}],"outputs":[],"properties":{"cnr_id":"comfy-core","ver":"0.3.76"},"widgets_values":["HunyuanVideo1.5/ComfyUI","auto","h264"]}],"links":[[205,11,0,44,0,"CLIP"],[206,10,0,8,1,"VAE"],[217,79,0,78,4,"CLIP_VISION_OUTPUT"],[218,80,0,78,3,"IMAGE"],[219,80,0,79,1,"IMAGE"],[222,44,0,78,0,"CONDITIONING"],[224,10,0,78,2,"VAE"],[225,81,0,79,0,"CLIP_VISION"],[240,11,0,93,0,"CLIP"],[246,93,0,78,1,"CONDITIONING"],[270,12,0,105,0,"MODEL"],[306,10,0,120,1,"VAE"],[308,8,0,101,0,"IMAGE"],[310,127,0,125,0,"NOISE"],[311,129,0,125,1,"GUIDER"],[312,128,0,125,2,"SAMPLER"],[313,126,0,125,3,"SIGMAS"],[314,130,0,129,0,"MODEL"],[315,78,2,125,4,"LATENT"],[316,105,0,126,0,"MODEL"],[317,105,0,130,0,"MODEL"],[318,78,0,129,1,"CONDITIONING"],[319,78,1,129,2,"CONDITIONING"],[320,125,0,8,0,"LATENT"],[322,125,0,120,0,"LATENT"],[349,101,0,142,0,"VIDEO"]],"groups":[{"id":1,"title":"Step 1 - Load models","bounding":[-630,-20,370,539.6],"color":"#3f789e","font_size":24,"flags":{}},{"id":2,"title":"Step 3 - Prompt","bounding":[-230,-20,442.84503173828125,493.6],"color":"#3f789e","font_size":24,"flags":{}},{"id":3,"title":"Step 2 - Upload start_image","bounding":[-630,530,370,520],"color":"#3f789e","font_size":24,"flags":{}},{"id":4,"title":"Step 4 - Video 
Size","bounding":[-230,500,440,320],"color":"#3f789e","font_size":24,"flags":{}},{"id":9,"title":"Custom Sampler","bounding":[240,170,672.3617858886719,651.6],"color":"#3f789e","font_size":24,"flags":{}}],"config":{},"extra":{"ds":{"scale":0.6452951493757959,"offset":[836.130955916922,250.39046438289876]},"frontendVersion":"1.32.10","groupNodes":{},"VHS_latentpreview":false,"VHS_latentpreviewrate":0,"VHS_MetadataImage":true,"VHS_KeepIntermediate":true,"workflowRendererVersion":"LG"},"version":0.4}

If ComfyUI reports missing nodes after you import the workflow, use ComfyUI-Manager to search for and install the required packages. Restart ComfyUI after installing so that the new nodes appear in the node list.
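When tracking down a missing node, it helps to see which node types a workflow actually uses. The sketch below reads a workflow in the UI export format shown above (a top-level "nodes" list whose entries carry "type" and "mode") and lists the node types, flagging those with mode 4, which is the value EasyCache and VAEDecodeTiled have in this file. Treating mode 4 as "bypassed" is my reading of this workflow, and the helper name is hypothetical.

```python
import json


def summarize_nodes(workflow):
    """Return (sorted node types, sorted bypassed node types) for a UI-format workflow dict."""
    types = set()
    bypassed = set()
    for node in workflow.get("nodes", []):
        types.add(node["type"])
        if node.get("mode") == 4:  # assumed to mean "bypassed"
            bypassed.add(node["type"])
    return sorted(types), sorted(bypassed)


if __name__ == "__main__":
    # A tiny stand-in for the real file; load your saved workflow with json.load instead.
    sample = json.loads(
        '{"nodes": [{"type": "UNETLoader", "mode": 0},'
        ' {"type": "EasyCache", "mode": 4}]}'
    )
    print(summarize_nodes(sample))
```

Comparing the first list against the nodes your ComfyUI install offers narrows down which package is missing.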

▲ Screenshot of the workflow in ComfyUI.

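Prompt Demos and Results (Image-to-Video)

All of the following tests use the same character illustration as the start image, with five different Chinese prompts, so the character keeps a consistent appearance across different actions and expressions.

The prompts used:

1. 保持角色外觀一致,讓人物自然向前走,微風輕吹頭髮,帶有柔和的景深效果 (Keep the character's appearance consistent; the character walks forward naturally, a light breeze moves the hair, with a soft depth-of-field effect)

2. 保持角色外觀一致,讓人物在身前比出愛心手勢,並做出可愛的表情 (Keep the character's appearance consistent; the character makes a heart gesture in front of the body with a cute expression)

3. 保持角色外觀一致,對著鏡頭比讚,充滿自信的表情 (Keep the character's appearance consistent; thumbs-up to the camera with a confident expression)

4. 保持角色外觀一致,讓人物漂亮的做一個轉身動作,最後向鏡頭開心揮手 (Keep the character's appearance consistent; the character does a graceful turn, then waves happily at the camera)

5. 保持角色外觀一致,讓人物蹲下後雙手比YA (Keep the character's appearance consistent; the character squats down and makes a peace sign with both hands)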
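Running five prompts means editing the positive prompt node five times. As a sketch of how that could be scripted, the helper below patches the prompt text in a workflow dict loaded from the JSON file, locating the node by the title it carries in this workflow. `with_positive_prompt` is a hypothetical helper, and it only prepares the variants; saving or queueing them is left out.

```python
import copy

# Title of the positive prompt node as it appears in this workflow's JSON.
POSITIVE_TITLE = "CLIP Text Encode (Positive Prompt)"


def with_positive_prompt(workflow, prompt):
    """Return a deep copy of a UI-format workflow with the positive prompt replaced."""
    patched = copy.deepcopy(workflow)
    for node in patched["nodes"]:
        if node.get("type") == "CLIPTextEncode" and node.get("title") == POSITIVE_TITLE:
            node["widgets_values"][0] = prompt
            return patched
    raise ValueError("positive prompt node not found")


if __name__ == "__main__":
    # Minimal stand-in for the real workflow dict.
    workflow = {
        "nodes": [
            {"type": "CLIPTextEncode", "title": POSITIVE_TITLE, "widgets_values": ["old"]},
        ]
    }
    variant = with_positive_prompt(workflow, "保持角色外觀一致,對著鏡頭比讚,充滿自信的表情")
    print(variant["nodes"][0]["widgets_values"][0])
```

Because the helper works on a copy, the original workflow stays untouched and can be reused for each of the five prompts.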

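For the results, I edited the five clips into a single video that shows the five actions in order, so you can watch the full range of character motion in one go:

Overall the results are quite good, but some obvious errors remain: prompt 4 never performs the turn, and the peace sign in prompt 5 looks off.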

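Conclusion

HunyuanVideo 1.5 brings high-quality video generation from the data center back to the personal computer: a lightweight architecture of about 8.3B parameters delivers near-SOTA image quality and motion coherence and runs inference on a single consumer graphics card, which makes it highly practical for individual creators and small teams. In this setup, the start image and CLIP Vision anchor the character's appearance, while Chinese prompts drive changes in motion and expression. You can define character actions one by one, much like writing a storyboard, and quickly build up a library of character motion clips. The one drawback is that it does not also generate background music or sound effects.

For illustrators, fan creators, VTubers, and projects that need many character motion drafts, "ComfyUI x HunyuanVideo 1.5 I2V" is an efficient workflow that a home graphics card can handle: on about 16GB of VRAM it stably outputs 720P character animation with a good balance of speed, quality, and control, and is well worth adding to a regular creative workflow.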
