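We feed prompts into large models to generate images. These large models, such as Stable Diffusion and Waifu Diffusion, are computed on large numbers of lab-grade machines and often run to several GB in size. But what do you do when a model doesn't recognize the object or character you want? This is where the small-model training feature in Stable Diffusion web UI comes in! A small model is one the user trains on top of a large model using a small set of sample images, so that it can produce images the base model couldn't before. There are currently two kinds of small model you can train yourself: embeddings (Textual Inversion) and hypernetworks. Embeddings take up less disk space and suit learning a single object, a character, or a simple art style; hypernetworks are larger and suit learning an overall art style.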

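This post is a tutorial on training an embedding (Textual Inversion). First, your machine needs a lot of GPU VRAM (more than 10 GB recommended), because training is very VRAM-hungry. If you're shopping for a graphics card to play with AI, the sweet-spot RTX 3060 with 12 GB of VRAM is a good choice. I previously tried generating the Touhou character Hata no Kokoro with a NovelAI + Anything merged model and it didn't really work (it looked nothing like her!), so I wanted to train my own embedding and see whether the AI could draw her correctly. Start by preparing the sample images to train on. You can find good-quality public artwork online; crop each image to 1:1, preferably at 512 x 512 px, using your favorite software or the online tool Birme. Then set up directories for the dataset. Here is my layout for reference: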

D:\ai\source -> raw sample images (source directory)

D:\ai\destination -> preprocessed sample images (destination directory)

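※ Note: aim for 30 or more raw sample images. For a character, use only images showing that one character (no group shots). Quality matters more than quantity. I had too few raw samples this time, so the training result looks poor, but the tutorial steps themselves are fine, and I hope you all end up with a good model.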
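If you would rather script the 1:1 crop than do it by hand, the arithmetic is simple. Below is a minimal sketch that only computes the crop region; the helper names `center_square_box` and `scale_to_target` are made up for this illustration, and it assumes you want the largest centered square, which may cut off a character who is not in the middle of the picture.

```python
def center_square_box(width: int, height: int) -> tuple:
    """Return (left, top, right, bottom) of the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def scale_to_target(side: int, target: int = 512) -> float:
    """Scale factor that maps a square of `side` px onto `target` px."""
    return target / side


if __name__ == "__main__":
    # Hypothetical 1920x1080 source image.
    box = center_square_box(1920, 1080)
    print("crop box:", box)
    print("scale factor:", scale_to_target(box[2] - box[0]))
```

The resulting box has the same (left, upper, right, lower) layout that Pillow's `Image.crop` expects, so it could be followed by `Image.resize((512, 512))` if you use that library.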

Next, open Stable Diffusion web UI and follow the steps below.

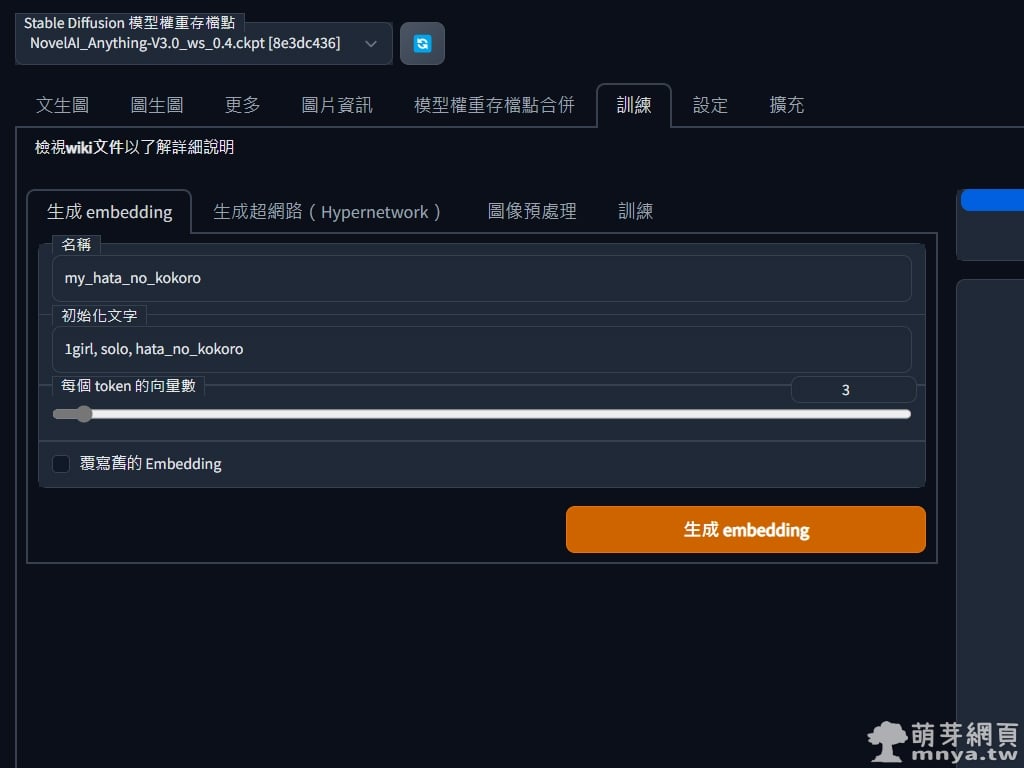

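▲ In the UI, use the "Stable Diffusion checkpoint" selector at the top to pick the large base model that the small model will be trained on. Go to the "Train" tab and open "Create embedding" to create a new embedding. Choose a name that won't be confused with an existing prompt term, e.g. my_hata_no_kokoro. The initialization text is the starting prompt, that is, terms from the base model that training will build on; I entered 1girl, solo, hata_no_kokoro. This seems to affect the quality of the resulting embedding to some degree. Set the number of vectors per token according to how much material you have: if you don't have much, keep the number low (3 to 5 recommended). When you're ready, click "Create embedding"; a success message appears on the right: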

Created: D:\stable-diffusion-webui\embeddings\my_hata_no_kokoro.pt

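▲ Next is "Preprocess images". Specify the source and destination directories here; this step preprocesses the raw samples. Set width and height to the resolution you cropped to, and the ratio must be 1:1! Checking "Create flipped copies" mirrors each original horizontally, which doubles the number of samples. Also check "Use deepbooru for caption" to generate matching prompt tags (only check this for anime-style characters, and the add-on must be installed before it can be used). Click "Preprocess" to start!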

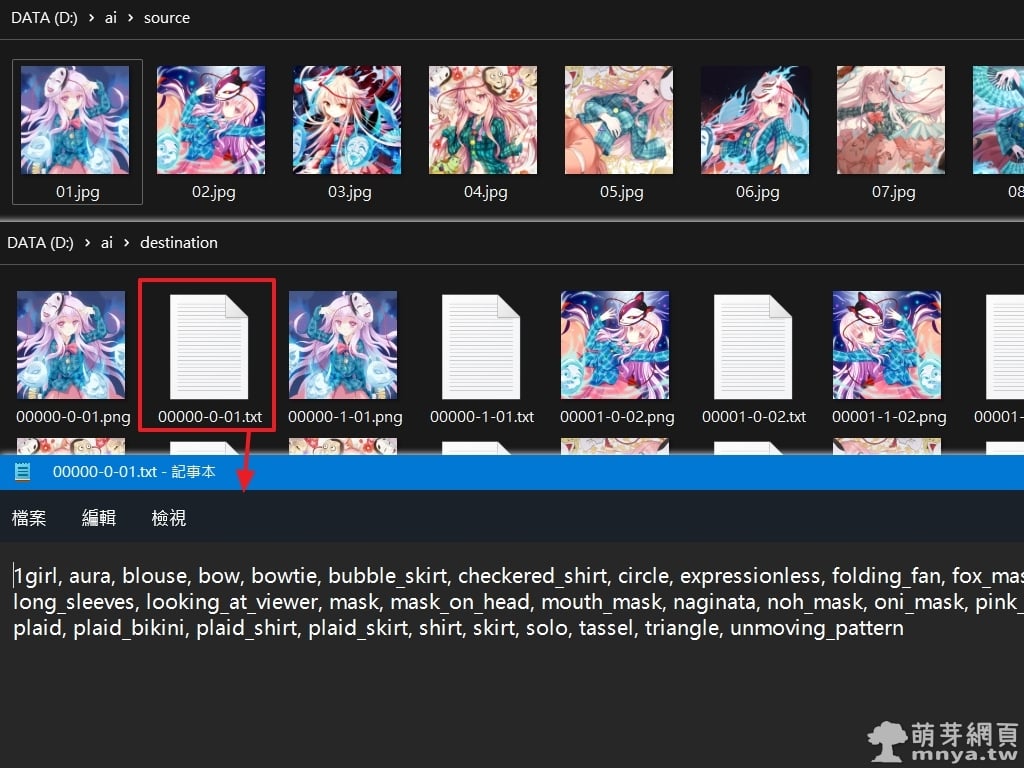

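▲ Once preprocessing finishes, the resulting files appear in the destination directory, and the software has already generated the likely prompt tags for each image!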
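Before training, it can be worth checking that every preprocessed image really got its tags. The sketch below assumes the preprocessor writes a comma-separated `.txt` caption with the same base name next to each image; that file layout, the list of image extensions, and the function names are assumptions for illustration, so adjust them to what you actually see in your destination directory.

```python
from pathlib import Path

# Extensions treated as images here (an assumption, extend as needed).
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".webp"}


def find_missing_captions(dataset_dir: Path) -> list:
    """Return images in dataset_dir that have no same-named .txt caption."""
    missing = []
    for image in sorted(dataset_dir.iterdir()):
        if image.suffix.lower() not in IMAGE_SUFFIXES:
            continue
        if not image.with_suffix(".txt").is_file():
            missing.append(image)
    return missing


def read_tags(caption_file: Path) -> list:
    """Split a comma-separated caption file into a list of tags."""
    text = caption_file.read_text(encoding="utf-8")
    return [tag.strip() for tag in text.split(",") if tag.strip()]


if __name__ == "__main__":
    dataset = Path(r"D:\ai\destination")  # the destination directory used in this post
    if dataset.is_dir():
        for image in find_missing_captions(dataset):
            print("no caption for", image.name)
```

Reading the tags back with `read_tags` is also a quick way to spot captions that describe the wrong thing before they end up in the training prompts.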

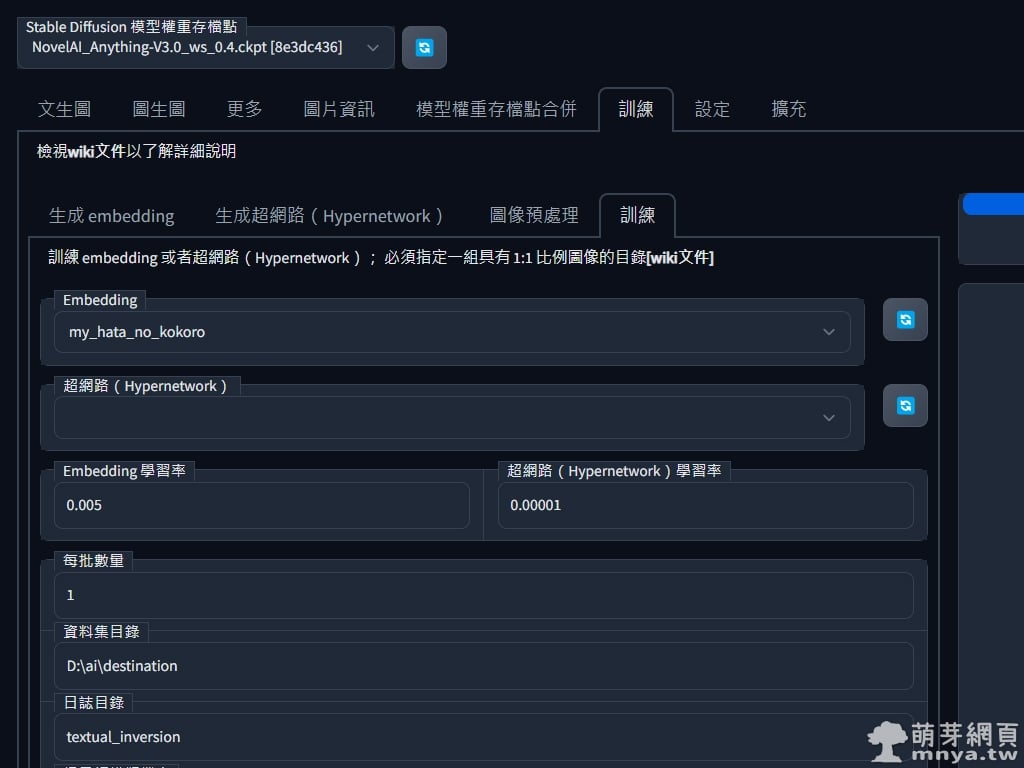

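▲ Finally, the "Train" section. For Embedding, select the one you just created and leave the other parameters alone for now. Set the dataset directory to the preprocessed path (mine is D:\ai\destination) and keep the default log directory. The prompt template file is important: characters and styles need different files, "subject_filewords.txt" for characters and "style_filewords.txt" for styles. More on this below.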

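▲ Width and height again follow your samples. Max steps can be set to 10,000; embedding training doesn't need many steps. Keep the default of saving an embedding and a preview image every 500 steps. Finally, click "Train Embedding" to start training.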

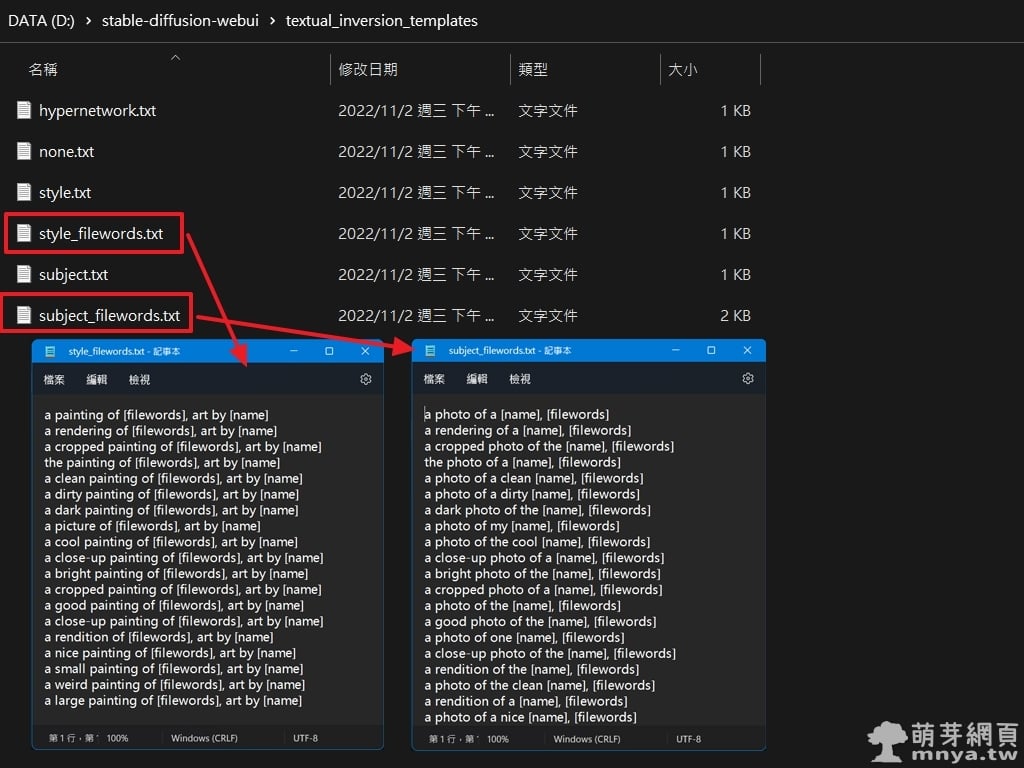

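▲ In textual_inversion_templates under the software's root directory you can see the prompt template files used for training: "subject_filewords.txt" for characters and "style_filewords.txt" for styles. When you write prompts later, follow the pattern used in the template. I trained with "subject_filewords.txt", so to use this embedding I enter a photo of a my_hata_no_kokoro, [...more prompt terms] to generate images!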
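To make the template idea concrete, here is a minimal sketch of how one template line could be turned into a training prompt. It assumes the template uses `[name]` for the embedding name and `[filewords]` for the tags from the image's caption file; check your own copy of subject_filewords.txt, because the placeholder syntax and the `fill_template` helper are assumptions for illustration, not the web UI's actual code.

```python
def fill_template(template_line: str, name: str, filewords: str) -> str:
    """Substitute the embedding name and per-image tags into one template line."""
    return template_line.replace("[name]", name).replace("[filewords]", filewords)


if __name__ == "__main__":
    # Assumed shape of one line in subject_filewords.txt.
    line = "a photo of a [name], [filewords]"
    print(fill_template(line, "my_hata_no_kokoro", "1girl, solo, pink hair"))
    # prints: a photo of a my_hata_no_kokoro, 1girl, solo, pink hair
```

This is why the prompt you type later should start the same way as the template: the embedding was trained in that sentence position.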

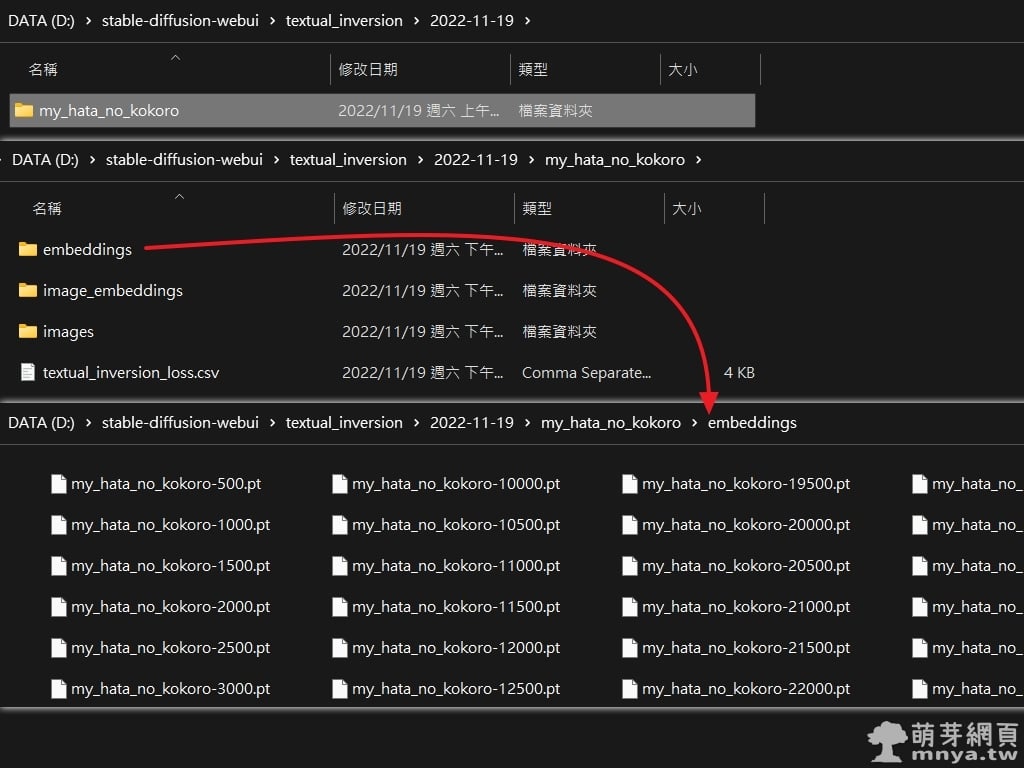

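▲ By default, logs go to textual_inversion under the root directory, split into subdirectories by date, with a directory named after the embedding inside. Its embeddings directory holds the .pt embedding files saved every N steps (500 by default).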

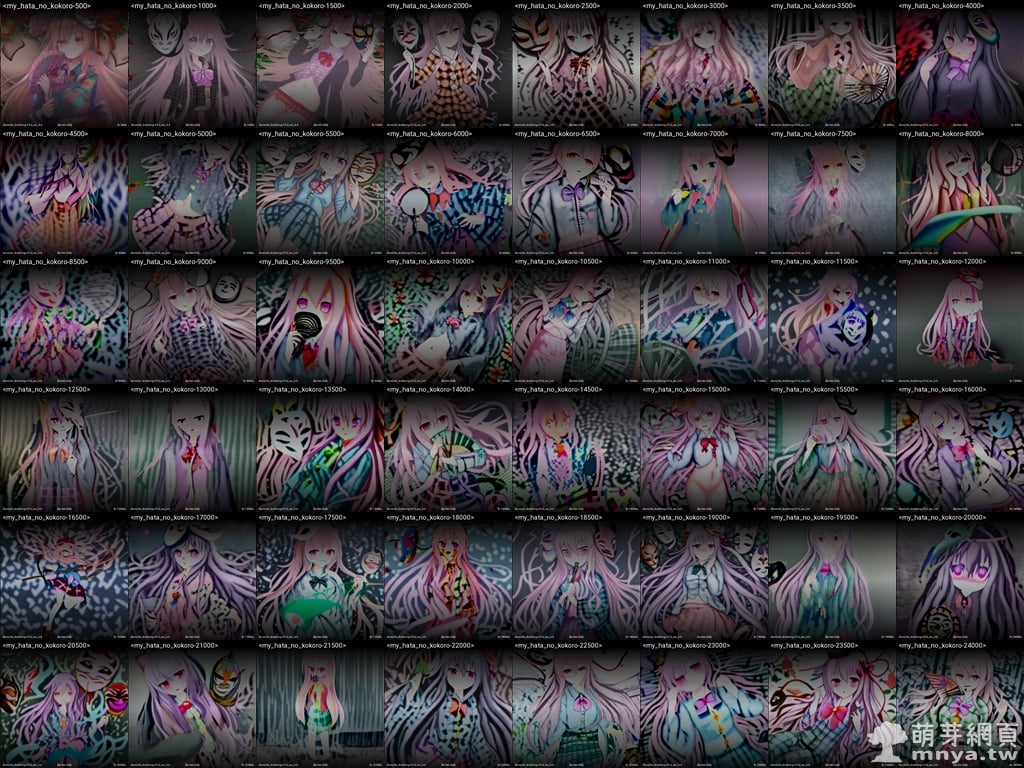

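▲ The image_embeddings directory holds the preview images with embedded information, saved every N steps (500 by default); they show the training step, the base model name, and so on.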

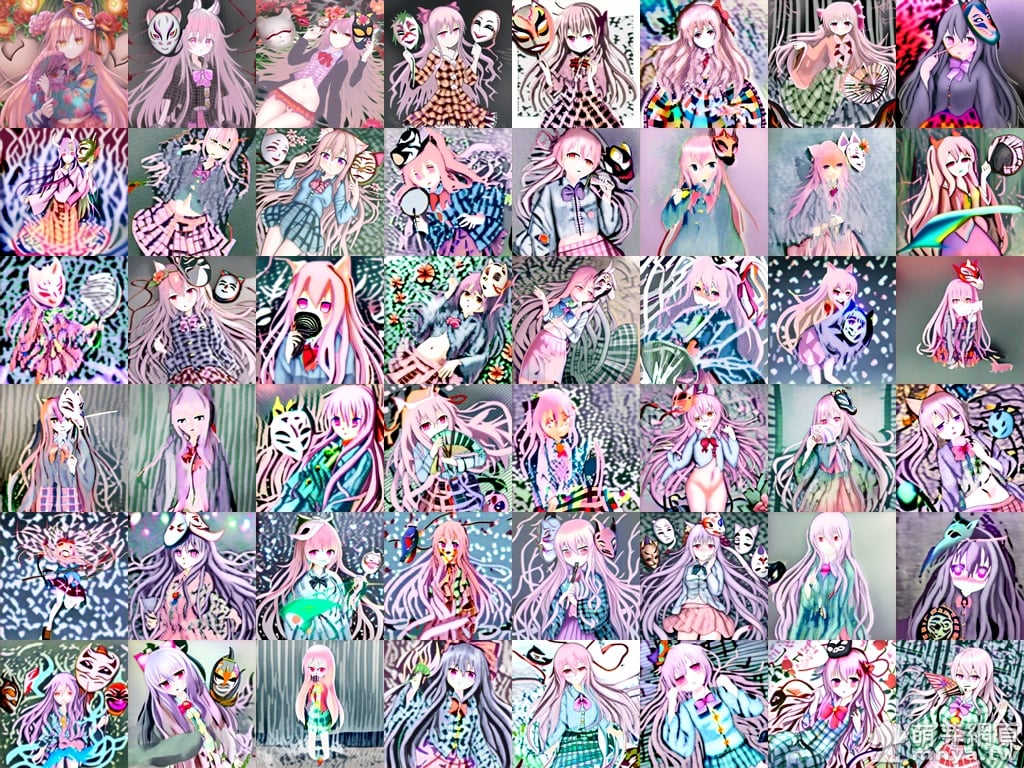

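▲ The images directory holds the plain preview images saved every N steps (500 by default), which you can compare directly against the embedding files. The preview is basically what the final output will look like (in style). I didn't have enough training samples this time, and the previews alone already show the poor result. It looks like a good result should appear within the first thousand steps; there's no need to spend hours running tens of thousands of steps, because later on the AI was mostly scribbling nonsense.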

The log also contains a textual_inversion_loss.csv file that records the whole training run. Here are my results:

step,epoch,epoch_step,loss,learn_rate

500,31,4,0.1647969,0.005

1000,62,8,0.2035500,0.005

1500,93,12,0.2492173,0.005

2000,124,16,0.2201941,0.005

2500,156,4,0.1637333,0.005

3000,187,8,0.1807594,0.005

3500,218,12,0.1986931,0.005

4000,249,16,0.2221759,0.005

4500,281,4,0.1647516,0.005

5000,312,8,0.2199931,0.005

5500,343,12,0.2097027,0.005

6000,374,16,0.2210557,0.005

6500,406,4,0.2075510,0.005

7000,437,8,0.2123710,0.005

7500,468,12,0.1762823,0.005

8000,499,16,0.1987181,0.005

8500,531,4,0.2082459,0.005

9000,562,8,0.1563430,0.005

9500,593,12,0.2780591,0.005

10000,624,16,0.1042284,0.005

10500,656,4,0.1799174,0.005

11000,687,8,0.1734964,0.005

11500,718,12,0.1933257,0.005

12000,749,16,0.1942165,0.005

12500,781,4,0.2264900,0.005

13000,812,8,0.1952641,0.005

13500,843,12,0.2060619,0.005

14000,874,16,0.1838281,0.005

14500,906,4,0.2413094,0.005

15000,937,8,0.1837390,0.005

15500,968,12,0.1961761,0.005

16000,999,16,0.2100048,0.005

16500,1031,4,0.2307835,0.005

17000,1062,8,0.2229874,0.005

17500,1093,12,0.1425070,0.005

18000,1124,16,0.1935754,0.005

18500,1156,4,0.2664926,0.005

19000,1187,8,0.1649749,0.005

19500,1218,12,0.2017491,0.005

20000,1249,16,0.1665867,0.005

20500,1281,4,0.2032003,0.005

21000,1312,8,0.1803317,0.005

21500,1343,12,0.1528136,0.005

22000,1374,16,0.1886314,0.005

22500,1406,4,0.2475957,0.005

23000,1437,8,0.2256665,0.005

23500,1468,12,0.2189753,0.005

24000,1499,16,0.1916140,0.005

24500,1531,4,0.2441203,0.005

25000,1562,8,0.1791483,0.005

25500,1593,12,0.2624347,0.005

26000,1624,16,0.2054515,0.005

26500,1656,4,0.1581340,0.005

27000,1687,8,0.1625501,0.005

27500,1718,12,0.1753392,0.005

28000,1749,16,0.2129618,0.005

28500,1781,4,0.2082969,0.005

29000,1812,8,0.1905257,0.005

29500,1843,12,0.1830073,0.005

30000,1874,16,0.2080867,0.005

30500,1906,4,0.1818547,0.005

31000,1937,8,0.1985296,0.005

31500,1968,12,0.0997733,0.005

32000,1999,16,0.1918320,0.005

32500,2031,4,0.2425240,0.005

33000,2062,8,0.2196417,0.005

33500,2093,12,0.1554626,0.005

34000,2124,16,0.2303733,0.005

34500,2156,4,0.2551335,0.005

35000,2187,8,0.1528614,0.005

35500,2218,12,0.2851300,0.005

36000,2249,16,0.2048200,0.005

36500,2281,4,0.2500226,0.005

37000,2312,8,0.2086859,0.005

37500,2343,12,0.2030108,0.005

38000,2374,16,0.2803172,0.005

38500,2406,4,0.1784415,0.005

39000,2437,8,0.2218448,0.005

39500,2468,12,0.1876105,0.005

40000,2499,16,0.2078659,0.005

40500,2531,4,0.2258463,0.005

41000,2562,8,0.1942652,0.005

41500,2593,12,0.1979953,0.005

42000,2624,16,0.1963333,0.005

42500,2656,4,0.2295723,0.005

43000,2687,8,0.1491647,0.005

43500,2718,12,0.1874174,0.005

44000,2749,16,0.2135164,0.005

44500,2781,4,0.1912760,0.005

45000,2812,8,0.2121909,0.005

45500,2843,12,0.2123150,0.005

46000,2874,16,0.1703888,0.005

46500,2906,4,0.1589555,0.005

47000,2937,8,0.1886290,0.005

47500,2968,12,0.2408557,0.005

48000,2999,16,0.2309446,0.005

48500,3031,4,0.2120837,0.005

49000,3062,8,0.2263282,0.005

49500,3093,12,0.2022610,0.005

50000,3124,16,0.2112575,0.005

50500,3156,4,0.2046367,0.005

51000,3187,8,0.1858108,0.005

51500,3218,12,0.2216587,0.005

52000,3249,16,0.1881346,0.005

52500,3281,4,0.1518442,0.005

53000,3312,8,0.1657947,0.005

53500,3343,12,0.1596016,0.005

54000,3374,16,0.1784721,0.005

54500,3406,4,0.2079419,0.005

55000,3437,8,0.2748984,0.005

55500,3468,12,0.2360647,0.005

56000,3499,16,0.2020658,0.005

56500,3531,4,0.2267685,0.005

57000,3562,8,0.2305008,0.005

57500,3593,12,0.2363006,0.005

58000,3624,16,0.1643513,0.005

58500,3656,4,0.2207141,0.005

59000,3687,8,0.2271157,0.005

59500,3718,12,0.1778646,0.005

60000,3749,16,0.1307331,0.005

60500,3781,4,0.1949560,0.005

61000,3812,8,0.1287404,0.005

61500,3843,12,0.1676540,0.005

62000,3874,16,0.2264707,0.005

62500,3906,4,0.1729074,0.005

63000,3937,8,0.2436761,0.005

63500,3968,12,0.1786481,0.005

64000,3999,16,0.1733526,0.005

64500,4031,4,0.2205692,0.005

65000,4062,8,0.1744124,0.005

65500,4093,12,0.1799495,0.005

66000,4124,16,0.1751718,0.005

66500,4156,4,0.2459914,0.005

67000,4187,8,0.2141965,0.005

67500,4218,12,0.1900976,0.005

68000,4249,16,0.2245717,0.005

68500,4281,4,0.2090053,0.005

69000,4312,8,0.2112840,0.005

69500,4343,12,0.2295332,0.005

70000,4374,16,0.2774963,0.005

70500,4406,4,0.1632809,0.005

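It ran for more than ten hours and about 70,000 steps, yet the result was poor. So training longer isn't automatically better; it still depends on the quality of the raw samples, the initialization text, and so on. It's quite an art.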
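Individual loss values in this log jump around a lot, so it is easier to judge a run by averaging over a few checkpoints at a time. The following is a minimal sketch using only the Python standard library; `SAMPLE_LOG` holds just the first four rows of the log above, and the function names and window size are arbitrary choices for illustration.

```python
import csv
from statistics import mean

# First four rows of the log above, including the blank lines between rows.
SAMPLE_LOG = """step,epoch,epoch_step,loss,learn_rate

500,31,4,0.1647969,0.005

1000,62,8,0.2035500,0.005

1500,93,12,0.2492173,0.005

2000,124,16,0.2201941,0.005
"""


def load_losses(csv_text):
    """Parse (step, loss) pairs from CSV text, skipping blank lines."""
    lines = [line for line in csv_text.splitlines() if line.strip()]
    return [(int(row["step"]), float(row["loss"])) for row in csv.DictReader(lines)]


def window_means(rows, window):
    """Average the loss over consecutive, non-overlapping windows of rows.

    Each result is (first step in the window, mean loss of the window).
    """
    return [
        (rows[i][0], mean(loss for _, loss in rows[i:i + window]))
        for i in range(0, len(rows), window)
    ]


if __name__ == "__main__":
    rows = load_losses(SAMPLE_LOG)
    for first_step, avg in window_means(rows, window=2):
        print(f"from step {first_step}: mean loss {avg:.4f}")
    best_step, best_loss = min(rows, key=lambda r: r[1])
    print(f"lowest logged loss {best_loss} at step {best_step}")
```

To run it on a real log, read textual_inversion_loss.csv into a string and pass it to `load_losses`. If the windowed means stay flat, more steps are unlikely to help. A low loss at one checkpoint does not guarantee a good-looking image, so still compare against the preview images.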

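Even knowing the result probably wouldn't be good, I still had to try the embedding out in txt2img! I used the same seed and prompt throughout to generate Hata no Kokoro.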

Prompt: a photo of a my_hata_no_kokoro, masterpiece, best quality, best quality,Amazing,beautiful detailed eyes,1girl, solo,finely detail,Depth of field,extremely detailed CG unity 8k wallpaper, solo focus,(Touhou Project),(((hata_no_kokoro))), looking at viewer, pink hair, long hair, hair between eyes, pink eyes, mask on head, fox mask, expressionless, dark green black plaid shirt, plaid, pink bowtie, multicolored buttons, long sleeves, orange skirt, loli.

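※ The my_hata_no_kokoro part of the prompt is where the embedding's name goes! Embeddings live in the embeddings directory under the software's root by default; the one I created is my_hata_no_kokoro.pt. You can swap it for any checkpoint from the log, as long as you rename it to my_hata_no_kokoro (e.g. to use the 500-step embedding, take my_hata_no_kokoro-500.pt from the log directory, rename it to my_hata_no_kokoro.pt, and move it into the embeddings directory under the root, replacing the original file).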
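Swapping checkpoints by hand gets tedious when you want to compare many steps, so the rename-and-replace step can be scripted. This is a minimal sketch with a hypothetical `install_checkpoint` helper; it copies rather than moves so the log stays intact, and the paths in the usage example are placeholders (the dated log subdirectory in particular is left for you to fill in).

```python
import shutil
from pathlib import Path


def install_checkpoint(log_embeddings_dir: Path, embeddings_dir: Path,
                       name: str, step: int) -> Path:
    """Copy <name>-<step>.pt from the log to <name>.pt in embeddings_dir.

    An existing <name>.pt in embeddings_dir is overwritten.
    """
    source = log_embeddings_dir / f"{name}-{step}.pt"
    if not source.is_file():
        raise FileNotFoundError(source)
    target = embeddings_dir / f"{name}.pt"
    shutil.copy2(source, target)
    return target


if __name__ == "__main__":
    # Placeholder paths: point these at your own log and embeddings directories.
    log_dir = Path("path/to/log/embeddings")
    emb_dir = Path(r"D:\stable-diffusion-webui\embeddings")
    if log_dir.is_dir() and emb_dir.is_dir():
        print(install_checkpoint(log_dir, emb_dir, "my_hata_no_kokoro", 500))
```

Because the file in the embeddings directory is overwritten, keep a copy elsewhere if you want to return to the final embedding later.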

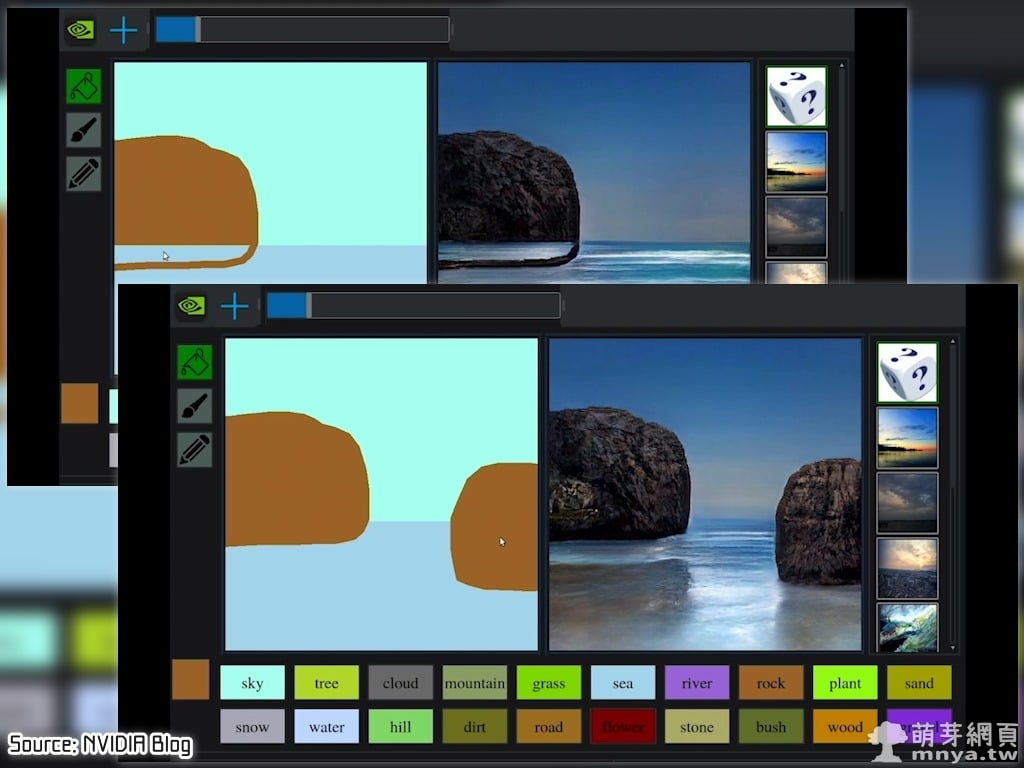

▲ Left: the result with no embedding at all. Right: the output with the 500-step embedding, which changes the look considerably.

▲ Next, 1,000 and 1,500 steps.

▲ 2,000 and 5,500 steps.

▲ 43,500 and 70,626 steps.

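I finally interrupted the run at step 70,626, eleven hours in total. Treat it as an experiment; I'm sharing the hard-won experience with everyone! Used well, this lets the AI draw just about anything! 👍😁 It's a pity I still couldn't get a gorgeous Hata no Kokoro. The material I found probably wasn't good enough or plentiful enough, so I'll keep working on it!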

《Previous》Stable Diffusion web UI x Anything: generating ultra-high-quality anime characters with AI on your own machine

《Next》Stable Diffusion web UI Hypernetwork training tutorial: teach the AI an art style!
